AI systems are increasingly part of our daily lives, which raises more questions about how they make decisions. In fields like healthcare, banking, and self-driving cars, the effects of AI decisions can be huge, so transparency matters more than ever. Explainable AI (XAI) addresses this need by aiming to make an AI system's decision-making process easier to understand and use. This post describes Explainable AI, explains why it's important, and shows how it can be used to make AI choices clear, which builds trust, accountability, and fairness.

What is Explainable AI?

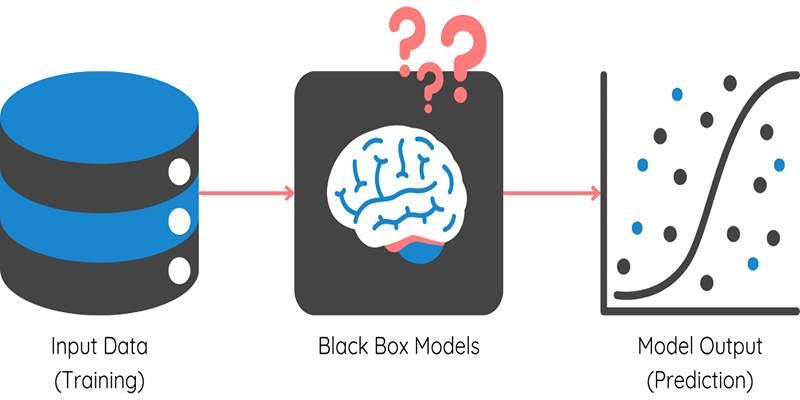

At its core, Explainable AI (XAI) refers to AI models and systems that provide clear and understandable explanations for their decisions. Unlike traditional AI models that work as “black boxes,” where the reasoning behind decisions is hidden, XAI allows users to interpret the rationale behind AI predictions.

For example, if an AI system denies a loan application or recommends a specific medical treatment, XAI would enable users—whether they’re consumers, doctors, or financial analysts—to understand the reasoning behind these outcomes. The goal is to ensure that AI systems are not only effective but also transparent and fair.

Why is Transparency in AI Decision-Making Important?

Building Trust in AI Systems

One of the most important reasons for transparency is that it builds trust. People need to be able to trust the choices that AI systems make, especially when those choices affect their lives. When an AI makes a suggestion or decision without giving a reason, it's hard to trust that choice.

For example, a person who applies for a loan and is turned down by an AI-powered system might feel angry or confused. XAI can describe why the application was turned down, for instance because of a low credit score, insufficient income, or past debt. This level of detail helps people understand the choice and makes it easier to check that AI choices are not random or unfair.

Ensuring Accountability

When AI systems are used in high-stakes environments like healthcare, criminal justice, or finance, accountability becomes essential. If an AI makes an incorrect or biased decision, it’s critical to understand how and why it arrived at that conclusion. XAI provides transparency that can help identify flaws in the system, so that errors can be traced and corrected.

Take the example of an AI used in predicting recidivism in the criminal justice system. If the AI wrongly predicts a person is at high risk of re-offending, the explanation behind that prediction is vital for legal professionals to challenge the result. This type of transparency allows for greater accountability and helps mitigate the risk of systemic bias.

How Does Explainable AI (XAI) Work?

To make AI systems transparent, XAI techniques are employed to explain how a model arrives at its decisions. There are two broad categories of XAI approaches: model-specific and model-agnostic methods.

Model-Specific Approaches

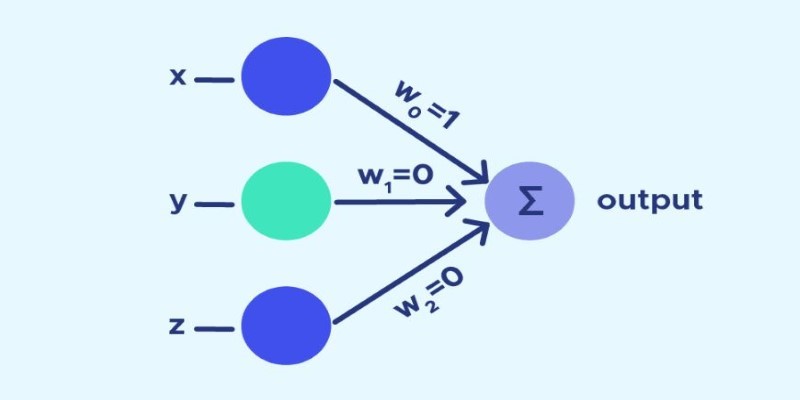

These techniques work with specific types of machine learning models. For example:

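- Decision Trees are inherently interpretable when they are reasonably small: each prediction follows a single path of if-then splits from the root to a leaf, so the structure can be visualized and the reasoning behind a prediction read off directly.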

- Linear Regression Models also provide a clear understanding of the relationships between inputs and predictions, as the model directly assigns a weight to each input feature.
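
The point about linear models can be made concrete with a minimal sketch. The feature names, weights, and intercept below are hypothetical values chosen for illustration, not taken from any real credit model. Because the prediction is just an intercept plus a weighted sum, each feature's contribution to one prediction is simply its weight times its value.

```python
# Hypothetical linear scoring model. Every name and number here is an
# illustrative assumption, not a real credit model.
INTERCEPT = -2.0
WEIGHTS = {
    "credit_score": 0.005,     # per score point
    "income_k": 0.02,          # per thousand of annual income
    "existing_debt_k": -0.05,  # per thousand of outstanding debt
}


def predict(applicant):
    """Return the model score: intercept plus the weighted sum of inputs."""
    return INTERCEPT + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def explain(applicant):
    """Return each feature's additive contribution (weight * value)."""
    return {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}


applicant = {"credit_score": 600, "income_k": 40, "existing_debt_k": 30}
contributions = explain(applicant)

# List features from the most negative to the most positive contribution.
for name, value in sorted(contributions.items(), key=lambda item: item[1]):
    print(f"{name:>16}: {value:+.2f}")
print(f"{'score':>16}: {predict(applicant):.2f}")
```

The contributions plus the intercept add up to the score, which is what makes this kind of model easy to explain: the feature with the most negative contribution is the one that hurt the applicant most.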

However, complex models such as deep neural networks are more challenging to explain. This is where model-agnostic techniques come into play.

Model-Agnostic Approaches

These approaches can be applied to any machine learning model, regardless of its structure. Some popular techniques include:

- LIME (Local Interpretable Model-agnostic Explanations): This technique explains predictions by approximating a complex model with a simpler one on a local level. It helps provide insight into individual predictions, making it easier to understand why a specific decision was made.

- SHAP (SHapley Additive exPlanations): SHAP uses Shapley values, a concept from cooperative game theory, to assign each feature a contribution score for a given prediction, helping explain how much each feature pushes that prediction up or down.

- Partial Dependence Plots (PDP): These plots show how the model's average prediction changes as one feature is varied while the other features keep their observed values, allowing users to understand how variations in specific features affect the model’s decision.
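
The game-theoretic idea behind SHAP can be sketched in a few lines. The example below computes exact Shapley values for a tiny hypothetical model by averaging each feature's marginal contribution over every possible ordering of the features. The model, the instance, and the single baseline used to represent "absent" features are all assumptions made for illustration. This is not the API of the `shap` library, and practical implementations typically rely on approximations, because the number of orderings grows factorially with the number of features.

```python
from itertools import permutations


def model(features):
    """Hypothetical black-box model with an interaction term (illustrative only)."""
    return (
        2.0 * features["income"]
        - 1.5 * features["debt"]
        + 0.5 * features["income"] * features["tenure"]
    )


def shapley_values(predict, instance, baseline):
    """Exact Shapley values, averaged over all feature orderings.

    A feature that is "absent" from a coalition takes its baseline value
    (a simplifying assumption for this sketch).
    """
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for ordering in orderings:
        current = dict(baseline)  # start with every feature absent
        previous_output = predict(current)
        for name in ordering:
            current[name] = instance[name]  # add this feature to the coalition
            output = predict(current)
            totals[name] += output - previous_output  # marginal contribution
            previous_output = output
    return {name: total / len(orderings) for name, total in totals.items()}


instance = {"income": 3.0, "debt": 2.0, "tenure": 4.0}
baseline = {"income": 1.0, "debt": 1.0, "tenure": 1.0}
phi = shapley_values(model, instance, baseline)

for name, value in phi.items():
    print(f"{name}: {value:+.3f}")
print("sum of contributions:", sum(phi.values()))
print("prediction minus baseline prediction:", model(instance) - model(baseline))
```

The last two printed numbers agree: Shapley values always distribute the gap between the prediction and the baseline prediction across the features, which is the "additive" part of the SHAP name.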

By utilizing these techniques, AI developers can make complex models more interpretable and transparent, improving both the model’s usability and trustworthiness.
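
As one concrete instance of these techniques, a partial dependence curve can be computed by hand without any plotting library. The sketch below uses a hypothetical model and a made-up four-row dataset, both purely illustrative. For each candidate value of one feature, it overwrites that feature in every row, runs the model, and averages the predictions.

```python
def risk_model(row):
    """Hypothetical black-box model (illustrative only)."""
    penalty = 5.0 if row["debt"] > 10 else 0.0
    return 0.3 * row["age"] + 2.0 * row["income"] - penalty


def partial_dependence(predict, dataset, feature, grid):
    """For each grid value, set `feature` to it in every row and average the predictions."""
    curve = []
    for value in grid:
        # {**row, feature: value} builds a modified copy; the dataset is not mutated.
        predictions = [predict({**row, feature: value}) for row in dataset]
        curve.append((value, sum(predictions) / len(predictions)))
    return curve


# Made-up data for illustration.
dataset = [
    {"age": 25, "income": 3.0, "debt": 5},
    {"age": 40, "income": 5.5, "debt": 12},
    {"age": 58, "income": 4.0, "debt": 20},
    {"age": 33, "income": 7.0, "debt": 0},
]

for value, average in partial_dependence(risk_model, dataset, "income", [2.0, 4.0, 6.0, 8.0]):
    print(f"income={value:.1f} -> average prediction {average:.2f}")
```

Plotting the resulting pairs gives the partial dependence plot for that feature. Because the curve is an average over the dataset, it can hide effects that differ strongly between individual rows, so it is best read as a summary rather than an explanation of any single prediction.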

Real-World Applications of Explainable AI

Healthcare

In the medical field, AI is being used for diagnostic purposes, such as interpreting medical images or suggesting treatment options. If an AI model incorrectly diagnoses a patient, XAI can help doctors understand why the model made that error, potentially saving lives. XAI allows healthcare providers to use AI as a tool for better-informed decisions rather than blindly relying on its recommendations.

Finance

AI in finance is used for applications such as credit scoring, fraud detection, and trading. When a loan application is rejected or a fraudulent transaction is flagged, both customers and financial institutions need to understand the reasoning behind these decisions. XAI helps explain why certain features, such as income level or transaction history, influence a decision, improving both transparency and fairness in financial services.

Autonomous Vehicles

Autonomous vehicles rely heavily on AI for decision-making, especially in complex, real-time environments. If an autonomous vehicle makes a decision that results in an accident, XAI can provide crucial insights into what the vehicle “saw” and how it interpreted the situation. This not only helps improve safety but also means that the vehicle’s decisions can be analyzed and understood by humans.

Conclusion

Explainable AI (XAI) is a vital development in the field of artificial intelligence, helping ensure that AI systems are not only effective but also transparent and accountable. As we continue to integrate AI into key sectors such as healthcare, finance, and law enforcement, the demand for transparency will grow. XAI will help make AI’s decision-making processes more accessible, fair, and trustworthy, laying the foundation for a future where AI decisions are not just automated but also understandable and explainable.