Building intelligent systems requires accurate and reliable predictions. A single high-performing model is desirable, but on many real-world problems no single model is consistently the best. Ensemble learning addresses this by combining multiple machine learning models into a unified system that often outperforms any of its individual members. Much like pooling the expertise of several specialists, this technique can improve both accuracy and reliability. Ensemble learning has become a standard way for data scientists to handle complex predictive tasks, making it an essential tool in advanced machine learning.

In this article, we'll discuss what ensemble learning is, why it works so well, and how it can improve the accuracy of machine learning models. This guide is meant to give a clear overview to newcomers and experienced practitioners alike.

What Is Ensemble Learning?

Ensemble learning is a machine learning method in which a collection of models, referred to as base models (or weak learners, when each is only modestly accurate on its own), is combined to solve a specific problem. The idea behind ensemble learning is that a group of diverse models can collectively make better predictions than any individual model alone. Members of an ensemble may differ in strength, but because they tend to make different mistakes, they can compensate for one another's errors and improve overall accuracy.

This approach is inspired by real-life scenarios. Consider voting systems in elections or decision-making processes in a team environment. A collective decision is often more reliable than an individual one because it aggregates multiple perspectives. Ensemble learning applies this same logic to machine learning by creating a system where multiple models work collaboratively to make better predictions.
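To make the voting analogy concrete, here is a minimal sketch of hard (majority) voting in plain Python. The names `majority_vote` and `ensemble_predict` are illustrative rather than taken from any library, and the three "models" are hypothetical lists of predictions standing in for trained classifiers.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine one prediction per model into a single label by plurality vote.

    Counter.most_common orders equal counts by first appearance, so ties go to
    the label that appears first in `predictions`.
    """
    label, _ = Counter(predictions).most_common(1)[0]
    return label


def ensemble_predict(model_outputs):
    """model_outputs[i][j] is model i's prediction for sample j."""
    # zip(*...) regroups the outputs so each tuple holds every model's vote for one sample.
    return [majority_vote(votes) for votes in zip(*model_outputs)]


# Three hypothetical classifiers, each wrong on a different one of three samples
# (assume the true labels are [1, 1, 1]).
model_outputs = [
    [1, 0, 1],
    [1, 1, 0],
    [0, 1, 1],
]
print(ensemble_predict(model_outputs))
print(majority_vote(["spam", "ham", "spam"]))
```

Each hypothetical model is right on only two of the three samples, but because their mistakes fall on different samples, the vote is right on all three.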

There are various ensemble learning techniques, each with its own way of combining models. Popular methods include bagging, boosting, and stacking. Although they differ, the underlying idea is the same: combine models to achieve better results.

Types of Ensemble Learning

To understand how ensemble learning enhances model performance, it's important to examine its key techniques. They differ in how the base models are built and combined, and in whether they mainly reduce variance or bias. The three most common methods are bagging, boosting, and stacking.

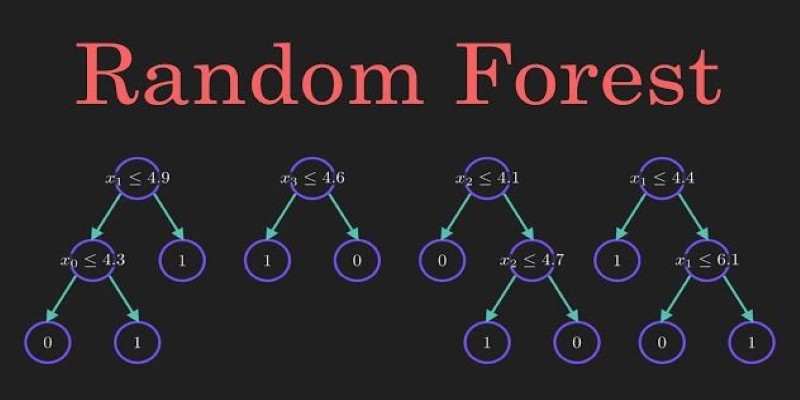

Bagging (Bootstrap Aggregating)

Bagging involves training multiple versions of a model on different bootstrap samples of the data and then combining their predictions, by averaging for regression or by majority vote for classification. Each bootstrap sample is drawn from the original dataset with replacement, usually at the same size as the original, so some data points appear multiple times while others are left out. Random Forest is a well-known bagging method that additionally considers only a random subset of features at each split. Because the individual models see different data, aggregating their outputs reduces variance and helps prevent overfitting, a common issue for high-variance models such as deep decision trees.
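The following is a from-scratch sketch of bagging for binary classification. It assumes a single numeric feature and uses a decision stump (a one-split tree) as the base learner purely to keep the example short; libraries such as scikit-learn provide production implementations (for example `BaggingClassifier` and `RandomForestClassifier`). The helper names `fit_stump`, `bagging_fit`, and `bagging_predict` are hypothetical.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Fit a 1-D decision stump for labels in {0, 1}.

    Tries every observed value as a threshold, in both directions, and keeps
    the split with the fewest training errors.
    """
    best = None
    for t in sorted(set(xs)):
        for polarity in (1, 0):
            preds = [polarity if x >= t else 1 - polarity for x in xs]
            errors = sum(p != y for p, y in zip(preds, ys))
            if best is None or errors < best[0]:
                best = (errors, t, polarity)
    _, t, polarity = best
    return lambda x: polarity if x >= t else 1 - polarity


def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train n_models stumps, each on its own bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: n indices drawn with replacement, so some points
        # repeat and others are left out of this model's training set.
        idx = rng.choices(range(n), k=n)
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Aggregate the stumps' predictions by majority vote."""
    return Counter(m(x) for m in models).most_common(1)[0][0]


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
models = bagging_fit(xs, ys)
print([bagging_predict(models, x) for x in (1, 8)])
```

For regression, the same loop would apply with `bagging_predict` returning the mean of the models' outputs instead of a vote.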

Boosting

Boosting builds a sequence of models, where each subsequent model attempts to correct the errors made by its predecessors. Unlike bagging, where models are trained independently, boosting trains models sequentially, with each new model paying extra attention to the mistakes of the earlier ones, for example by up-weighting misclassified examples (AdaBoost) or by fitting the remaining residual errors (gradient boosting). Popular boosting algorithms include AdaBoost, Gradient Boosting, and XGBoost. Boosting mainly reduces bias and often produces highly accurate models, though it can overfit if settings such as the number of rounds, the learning rate, and model complexity are not properly tuned.
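Here is a compact sketch of discrete AdaBoost, assuming a single numeric feature, labels in {-1, +1}, and decision stumps as weak learners; the helper names are hypothetical. Each round fits a stump to the current sample weights, gives it a vote weight `alpha` based on its weighted error, and then increases the weights of the points it misclassified so the next stump focuses on them.

```python
import math


def stump_predict(x, t, polarity):
    """Decision stump output in {-1, +1}."""
    return polarity if x >= t else -polarity


def fit_weighted_stump(xs, ys, w):
    """Return (weighted_error, threshold, polarity) of the best stump under weights w."""
    best = None
    for t in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(wi for x, y, wi in zip(xs, ys, w) if stump_predict(x, t, polarity) != y)
            if best is None or err < best[0]:
                best = (err, t, polarity)
    return best


def adaboost_fit(xs, ys, n_rounds=10):
    n = len(xs)
    w = [1.0 / n] * n  # start with uniform sample weights
    ensemble = []      # list of (alpha, threshold, polarity)
    for _ in range(n_rounds):
        err, t, pol = fit_weighted_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)  # more accurate stumps get bigger votes
        ensemble.append((alpha, t, pol))
        # Multiply weights by exp(+alpha) for mistakes and exp(-alpha) for hits, then renormalize.
        w = [wi * math.exp(-alpha * y * stump_predict(x, t, pol)) for x, y, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble


def adaboost_predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, t, pol) for alpha, t, pol in ensemble)
    return 1 if score >= 0 else -1


xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]
model = adaboost_fit(xs, ys, n_rounds=3)
print([adaboost_predict(model, x) for x in xs])
```

In this toy dataset the positive class sits at both ends (x ≤ 2 and x ≥ 5), so the best single stump still misclassifies two of the six points, while a weighted vote of three sequentially trained stumps classifies all of them correctly.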

Stacking

Stacking takes ensemble learning a step further, typically by combining models of different types rather than replicating the same base model. In stacking, multiple base models are trained, and their predictions are used as input features for a higher-level model, often called a meta-model. To keep the meta-model from simply rewarding base models that memorized the training labels, it is usually trained on out-of-fold (cross-validated) predictions. The meta-model learns how to weight or combine the base models, effectively learning which ones to trust under different conditions. This approach can yield powerful results by leveraging the strengths of diverse models, but it also requires careful selection of base models and tuning of the meta-model.
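Below is a stacking sketch for regression under deliberately simple assumptions: two hypothetical base learners (an ordinary least-squares line and a 1-nearest-neighbor regressor), a meta-model that is a linear combination of their predictions without an intercept, and k-fold out-of-fold predictions built with a simple `i % k` split. All function names are illustrative; scikit-learn's `StackingRegressor` is one production alternative.

```python
def fit_linear(xs, ys):
    """Base learner 1: ordinary least-squares line y = a + b * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x


def fit_nearest_neighbor(xs, ys):
    """Base learner 2: predict the target of the closest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


BASE_LEARNERS = [fit_linear, fit_nearest_neighbor]


def out_of_fold_predictions(xs, ys, k=5):
    """For every point, each base model's prediction from a model that never saw that point."""
    n = len(xs)
    oof = [[0.0] * len(BASE_LEARNERS) for _ in range(n)]
    for fold in range(k):
        train = [i for i in range(n) if i % k != fold]
        held_out = [i for i in range(n) if i % k == fold]
        tx, ty = [xs[i] for i in train], [ys[i] for i in train]
        for j, learner in enumerate(BASE_LEARNERS):
            model = learner(tx, ty)
            for i in held_out:
                oof[i][j] = model(xs[i])
    return oof


def fit_meta_weights(features, ys):
    """Meta-model: least-squares weights for two base predictions (2x2 normal equations)."""
    s11 = sum(f[0] * f[0] for f in features)
    s12 = sum(f[0] * f[1] for f in features)
    s22 = sum(f[1] * f[1] for f in features)
    t1 = sum(f[0] * y for f, y in zip(features, ys))
    t2 = sum(f[1] * y for f, y in zip(features, ys))
    det = s11 * s22 - s12 * s12
    return ((t1 * s22 - t2 * s12) / det, (s11 * t2 - s12 * t1) / det)


def fit_stacking(xs, ys, k=5):
    weights = fit_meta_weights(out_of_fold_predictions(xs, ys, k), ys)
    # Refit every base model on all the data for use at prediction time.
    base_models = [learner(xs, ys) for learner in BASE_LEARNERS]

    def predict(x):
        return sum(w * m(x) for w, m in zip(weights, base_models))

    return predict, weights


xs = list(range(10))
ys = [2 * x + 1 for x in xs]
predict, weights = fit_stacking(xs, ys)
print(weights, predict(10))
```

Because the toy data are exactly linear, the meta-model puts essentially all of its weight on the linear base model. On real data, the learned weights would instead reflect how well each base model performs on points it was not trained on.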

Why Does Ensemble Learning Work?

The effectiveness of ensemble learning stems from the fact that different models tend to make different errors. When these models are combined, their errors can partially cancel out, provided the errors are not strongly correlated, leading to a more accurate overall prediction. This idea, often called the wisdom of the crowd, explains why diverse perspectives often produce better outcomes in real-world decision-making, and it applies equally well in machine learning.
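A short calculation shows when this error cancellation works. Assuming n classifiers that are each correct with probability p and make independent errors (a strong idealization that real models rarely meet), the probability that a majority vote is correct is a binomial tail sum. The function name `majority_accuracy` is hypothetical.

```python
from math import comb


def majority_accuracy(p, n):
    """P(a majority of n independent voters is correct), each correct with probability p.

    Assumes n is odd so there are no ties, and that errors are independent.
    """
    need = n // 2 + 1
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


print(majority_accuracy(0.7, 1))   # a single model: 0.7
print(majority_accuracy(0.7, 3))   # three voters: about 0.784
print(majority_accuracy(0.7, 11))  # keeps rising as independent voters are added
print(majority_accuracy(0.4, 3))   # below 50% accuracy, voting hurts: about 0.352
```

The last line is the important caveat: voting only helps when the members are better than chance, and correlated errors shrink the gain because the models then tend to be wrong together.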

In addition, ensemble methods, particularly averaging-based ones such as bagging, reduce the risk of overfitting. Overfitting happens when a model learns the noise in the data rather than the underlying pattern, leading to poor performance on new, unseen data. By combining multiple models, ensemble learning smooths out the idiosyncrasies of individual models, which typically results in better generalization to new data.
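The smoothing effect can be quantified for averaged regressors. If each of m models has error variance σ² and every pair of models has error correlation ρ, the variance of their average is ρσ² + (1 − ρ)σ²/m, which follows from expanding the variance of a sum of correlated terms. The sketch below implements that formula and checks it with a small Monte Carlo simulation; the equal-variance, equal-correlation setup and the function names are assumptions made for illustration.

```python
import math
import random
import statistics


def ensemble_variance(sigma2, rho, m):
    """Variance of the mean of m predictors with equal variance sigma2 and pairwise correlation rho."""
    return rho * sigma2 + (1 - rho) * sigma2 / m


def simulated_variance(rho, m, trials=20000, seed=0):
    """Monte Carlo estimate of the same quantity with sigma2 = 1.

    Each model's error is sqrt(rho) * shared_noise + sqrt(1 - rho) * private_noise,
    which gives unit variance and pairwise correlation rho.
    """
    rng = random.Random(seed)
    averages = []
    for _ in range(trials):
        shared = rng.gauss(0, 1)
        errors = [math.sqrt(rho) * shared + math.sqrt(1 - rho) * rng.gauss(0, 1) for _ in range(m)]
        averages.append(sum(errors) / m)
    return statistics.variance(averages)


for rho in (0.0, 0.5, 0.9):
    print(rho, ensemble_variance(1.0, rho, 10), round(simulated_variance(rho, 10), 3))
```

The first term, ρσ², does not shrink no matter how many models are added, which is why methods such as bagging and Random Forest try to make their members as decorrelated as possible.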

Moreover, ensemble learning provides flexibility. Because a single system can include different types of models, data scientists can experiment with various algorithms and architectures and let the ensemble draw on the strengths of each.

Applications of Ensemble Learning

Ensemble learning is widely used across industries, primarily because it improves predictive accuracy and reliability. In fields such as healthcare, finance, and marketing, where accurate predictions are critical, ensemble learning often leads to superior results.

In healthcare, for example, ensemble models can help in disease prediction by combining multiple diagnostic algorithms, each trained on different types of medical data. This approach can make the overall prediction more robust and less prone to errors caused by the limitations of any single algorithm.

In finance, ensemble learning is applied in risk assessment, fraud detection, and algorithmic trading. By aggregating predictions from different models trained on various market indicators, financial institutions can make more reliable decisions.

Marketing is another domain where ensemble models excel. By combining customer behavior models, companies can predict customer preferences more accurately, leading to better-targeted campaigns and improved customer satisfaction.

Conclusion

Ensemble learning combines multiple models to enhance predictive accuracy and reduce errors, making it a cornerstone of modern machine learning. By leveraging diverse models and methods like bagging, boosting, and stacking, ensemble techniques improve generalization and mitigate issues like overfitting. This approach mirrors real-world decision-making, where combining multiple perspectives often yields better results. Whether in healthcare, finance, or marketing, ensemble learning offers robust solutions to complex problems, often improving both reliability and accuracy. Understanding and applying ensemble methods can significantly boost the performance of machine learning models, making them more effective for real-world applications.